Install Python Packages on Azure Synapse

This post is very similar to the last one, but this time we’ll release our Python package to Azure Synapse instead of Databricks. Unit testing Synapse Notebooks directly means jumping through all sorts of hoops. Why not run our unit tests outside of Synapse, in our DevOps pipeline where unit tests are supposed to run, and release a tested version of our code right after?

Let’s use the same basic setup as in the post on testing Python code, then use what we learned in the post on creating Python packages to convert our code to a package. Finally, we will install the package on our Apache Spark pool.

Basic Setup

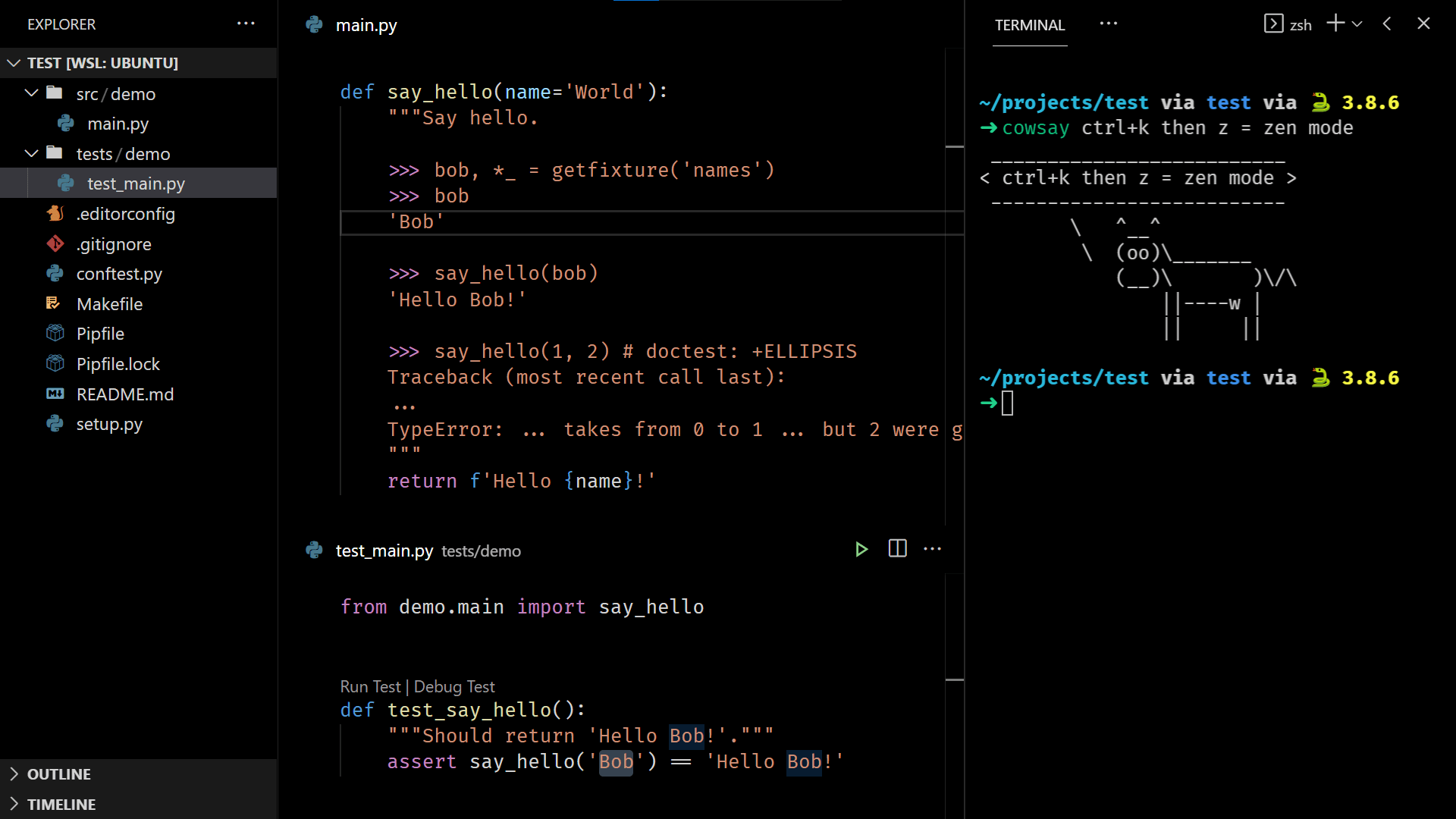

Following the previously mentioned posts, we’d have a setup that looks like this:

Manual Package Installation

To run PySpark (Python) cells in Synapse Notebooks, you need to have an Apache Spark pool attached.

You can provide a requirements.txt file during or after pool creation.

To install custom packages, you upload the wheel file to a particular location in the linked storage account. Your account needs the “Storage Blob Data Contributor” role to copy files to the storage account with the Azure CLI (alternatively, you can use the older --auth-mode key option).

Insert the following snippet at the top of your Makefile:

.EXPORT_ALL_VARIABLES:

WHEELNAME=demo-0.0.dev0-py3-none-any.whl
SYNAPSE_SA_NAME=mystorageaccount
SYNAPSE_CONTAINER_NAME=mycontainer
SYNAPSE_WORKSPACE_NAME=myworkspace
SYNAPSE_SPARKPOOL_NAME=guiltyspark
SYNAPSE_PACKAGE_PATH=synapse/workspaces/${SYNAPSE_WORKSPACE_NAME}/sparkpools/${SYNAPSE_SPARKPOOL_NAME}/libraries/python/${WHEELNAME}
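The layout of SYNAPSE_PACKAGE_PATH is easier to see when it is assembled piece by piece. The following is a minimal Python sketch that assumes the same placeholder values as the Makefile variables; the function name synapse_package_path is hypothetical and not part of any Azure SDK.

```python
from pathlib import PurePosixPath


def synapse_package_path(workspace: str, sparkpool: str, wheel_name: str) -> str:
    """Build the storage path that the Makefile keeps in SYNAPSE_PACKAGE_PATH."""
    # Storage paths use forward slashes, so PurePosixPath is used
    # regardless of the operating system that runs this script.
    path = PurePosixPath(
        "synapse", "workspaces", workspace,
        "sparkpools", sparkpool,
        "libraries", "python", wheel_name,
    )
    return str(path)


if __name__ == "__main__":
    # Placeholder values copied from the Makefile snippet.
    print(synapse_package_path("myworkspace", "guiltyspark",
                               "demo-0.0.dev0-py3-none-any.whl"))
```

Only the workspace name, the pool name, and the wheel file name vary; the remaining path segments are fixed.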

And append this snippet at the end of your Makefile:

install-package-synapse:
	@echo Installing 'dist/${WHEELNAME}' on azure synapse...
	az storage fs file upload \
		-s dist/${WHEELNAME} \
		-p ${SYNAPSE_PACKAGE_PATH} \
		-f ${SYNAPSE_CONTAINER_NAME} \
		--account-name ${SYNAPSE_SA_NAME} \
		--auth-mode login \
		--overwrite

Run the make build command in your terminal. Confirm that the file dist/demo-0.0.dev0-py3-none-any.whl has been created:

➜ make build
...
➜ ls dist
demo-0.0.dev0-py3-none-any.whl
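The same check can be scripted, which helps when the version in the wheel name changes between builds. This is a minimal sketch that assumes the build writes exactly one wheel into dist/; the helper name find_wheel is hypothetical.

```python
from pathlib import Path


def find_wheel(dist_dir: str = "dist") -> Path:
    """Return the single .whl file in dist_dir, or raise if none or several exist."""
    wheels = sorted(Path(dist_dir).glob("*.whl"))
    if not wheels:
        raise FileNotFoundError(
            f"no wheel found in {dist_dir!r}; run 'make build' first"
        )
    if len(wheels) > 1:
        names = ", ".join(wheel.name for wheel in wheels)
        raise RuntimeError(f"expected one wheel in {dist_dir!r}, found: {names}")
    return wheels[0]
```

Calling find_wheel().name after a build returns the file name that the Makefile otherwise hardcodes in WHEELNAME.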

Finally, run the new make install-package-synapse command in your terminal to copy the wheel file, then restart the Spark pool in Synapse.

By adding the copy command to a DevOps release pipeline, you can automatically roll out new (tested) versions of your packaged code, and use them in your Synapse Notebooks.
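In a pipeline step, the copy command could also be driven from a small Python script instead of make. The following is a sketch under assumptions, not a definitive implementation: it mirrors the az storage fs file upload flags from the Makefile target, expects the Azure CLI to be installed and logged in on the build agent, and uses the hypothetical helper names build_upload_command and upload_wheel.

```python
from __future__ import annotations

import subprocess


def build_upload_command(wheel_path: str, package_path: str,
                         container: str, account_name: str) -> list[str]:
    """Return the az CLI call used by the install-package-synapse target."""
    return [
        "az", "storage", "fs", "file", "upload",
        "-s", wheel_path,        # local wheel file
        "-p", package_path,      # destination path inside the container
        "-f", container,         # file system (container) name
        "--account-name", account_name,
        "--auth-mode", "login",  # use the signed-in identity, not an account key
        "--overwrite",
    ]


def upload_wheel(wheel_path: str, package_path: str,
                 container: str, account_name: str) -> None:
    """Run the upload and fail the pipeline step if az exits with an error."""
    command = build_upload_command(wheel_path, package_path, container, account_name)
    # check=True raises CalledProcessError on a non-zero exit code.
    subprocess.run(command, check=True)
```

Keeping the command construction separate from its execution makes it possible to inspect or log the exact call without touching the storage account. On Windows agents, where the CLI is installed as az.cmd, the subprocess call may need adjusting.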